I didn't originally plan on participating because of the bustle of the holidays and closing out the year, but a friend asked for some help on one of the challenges, and after that I couldn't stop. I was hooked and had to keep solving.

This blog entry isn't a full write-up of all the great challenges; it covers only a few of the more complicated ones, which I enjoyed the most.

Objective 8 - Machines can also learn to be an Elf - Machine Learning to Bypass a CAPTCHA

I loved this challenge because it taught me something genuinely new; my knowledge of machine learning was extremely limited.

We need to submit our entry for the chance to win some free cookies, but we are presented with a CAPTCHA that asks us to identify three different kinds of items within 5 seconds, which is not humanly possible.

Enter machine learning and TensorFlow! The following resources were provided to give a bit of knowledge on this topic:

Additionally, the challenge gives us an archive of 12,000 images, separated into categories by image type, as well as a Python script to get us started. All the necessary tools are provided; it's up to us to train the model and code up the logic to bypass the actual CAPTCHA.

First, we need to use the "retrain.py" script included in the GitHub repo to train the model to recognize each of the different categories of images. To do this, we just point the script to the directory that contains all 12,000 images and let it run. Output will look similar to the following:

Next, I modified some of the code in the repo so I could import it as a library. I then added to and modified the half-completed script that was provided so that it quickly saves all the generated CAPTCHA images to a directory and then calls the library to analyze and predict what each image should be.

Poketrainer.py

capteha_api.py

The UUIDs of the images predicted to match the requested types are stored in a list and then submitted as a POST request for the CAPTCHA solution. I could likely have made major improvements to the script's speed, but this ended up working nicely.
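
As a rough illustration of that last step, the sketch below filters a set of predictions down to the requested categories and joins the UUIDs into a form body. It is not the script from the challenge: the label names, the UUID values, and the `answer` field name are all hypothetical placeholders.

```python
from typing import Dict, Iterable, List


def select_matching_uuids(predictions: Dict[str, str], wanted: Iterable[str]) -> List[str]:
    """Return the UUIDs whose predicted label is one of the requested types."""
    wanted_set = {w.strip().lower() for w in wanted}
    return [uuid for uuid, label in predictions.items()
            if label.strip().lower() in wanted_set]


def build_answer_payload(uuids: Iterable[str]) -> Dict[str, str]:
    """Build the POST form body. The 'answer' field name is an assumption."""
    return {"answer": ",".join(uuids)}


# Hypothetical model output: image UUID -> predicted category.
predictions = {
    "uuid-1": "Candy Canes",
    "uuid-2": "Stockings",
    "uuid-3": "Ornaments",
    "uuid-4": "Candy Canes",
}
# Hypothetical categories requested by the CAPTCHA.
wanted = ["Candy Canes", "Ornaments"]

payload = build_answer_payload(select_matching_uuids(predictions, wanted))
print(payload)
```

Keeping the filtering separate from the HTTP call makes it easy to check the selection logic offline before racing the 5 second timer.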

Objective 9 - Sleighing the DB Blind - SQL Injection to Retrieve the Datas

Based on the objective text, it's quite obvious SQL injection is going to be the key here. We're presented with a web page that contains a form to submit university applications.

However, the requests show that before every request to the backend, "validator.php" is called to generate a unique time-based token that must be used immediately. Because of this, we need to make sure a fresh token is generated and included with each SQL injection request so that the request is processed.
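
The token flow can be sketched in a few lines of Python. This is only an illustration of the idea, not what the application or Burp actually does internally: the host name, the assumption that validator.php returns the bare token in the response body, and the `token` parameter name are all placeholders.

```python
from typing import Callable, Dict
from urllib.request import urlopen

# Hypothetical location of the token endpoint.
VALIDATOR_URL = "https://target.example/validator.php"


def fetch_token(url: str = VALIDATOR_URL) -> str:
    """Request a new token. Assumes the response body is the token itself."""
    with urlopen(url, timeout=10) as resp:
        return resp.read().decode().strip()


def with_fresh_token(params: Dict[str, str],
                     get_token: Callable[[], str] = fetch_token) -> Dict[str, str]:
    """Return a copy of params with a just-issued token attached.

    The token is fetched at the last moment because it expires quickly.
    The parameter name "token" is an assumption.
    """
    fresh = dict(params)
    fresh["token"] = get_token()
    return fresh
```

Passing the token fetcher in as an argument means the same wrapper can be exercised offline with a stub, for example `with_fresh_token({"name": "test'"}, get_token=lambda: "abc")`.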

There are two ways to do this: using a custom sqlmap tamper script or by using Burp Suite macros. I decided to just let Burp handle everything, but in either case, we are going to let sqlmap do all the heavy lifting after all is said and done.

First, I confirmed that SQL injection was possible by sending some apostrophes in the form data.

This error shows us that input placed into the name field is not sanitized and used as-is, which means we can insert arbitrary SQL queries. Now we just need to set up a Burp macro and session handling rule so we can process this through sqlmap. This is accomplished via the following steps:

Project options > Sessions tab > Macros > Add

From within the Macro Editor, the request to validator.php can be selected from the proxy history.

The Configure Item option can then be selected, and a custom parameter can be defined.

Back in the Sessions tab under Session Handling Rules, a new rule can be added, and the rule can be set to run the macro that was just created.

The options when selecting the macro should look like the following:

The scope of this rule can then be modified to include the proxy.

Now all that needs to be done is to point sqlmap to the Burp proxy, and the token generation will be handled automagically allowing for our sqlmap payloads to get through.
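
For reference, a sqlmap invocation routed through Burp could be assembled roughly as follows. The target URL, form data, and parameter name are hypothetical; the proxy address is Burp's default listener. The flags themselves (`-u`, `--data`, `-p`, `--proxy`, `--batch`, `--dump`) are standard sqlmap options.

```python
import shlex
from typing import List


def sqlmap_command(url: str, form_data: str, param: str,
                   proxy: str = "http://127.0.0.1:8080") -> List[str]:
    """Build a sqlmap argument list that sends every request through a proxy."""
    return [
        "sqlmap",
        "-u", url,            # target URL
        "--data", form_data,  # POST body to test
        "-p", param,          # parameter to inject into
        "--proxy", proxy,     # route traffic through Burp so the macro runs
        "--batch",            # accept default answers to prompts
        "--dump",             # dump table entries once injection works
    ]


# Hypothetical target and form fields.
cmd = sqlmap_command("https://target.example/application.php",
                     "name=test&token=placeholder", "name")
print(shlex.join(cmd))
```

The printed line can be pasted into a shell, or the list can be handed to `subprocess.run` directly.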

Allowing this to run, we can dump the entire database and discover the "krampus" table, which contains various "path" values pointing to PNG images.

Browsing to these PNGs gives the answer to the objective: Super Sled-o-matic

Objective 10 - Elfscrow Inside and Out: Reversing and Crypto

The objective provides a Windows binary, a PDB file, and an encrypted PDF file. The goal is to analyze the binary and determine how to break the cryptography. The following resource was given, and it is a very well-made introduction to the topic:

To start off, I explored the executable by running it to determine its use.

There is an insecure mode with some text hinting at insecurities, and it appears we need an ID in order to perform the decryption. As a test, I tried to encrypt a file.

The output gives a seed value, a key, and an ID. After this, I loaded the binary and the PDB into IDA to explore what is happening. From the main function we have paths to both the encrypt and decrypt functions. Exploring the "do_decrypt" function, we can see that it starts by performing an internet connection check and then reaches out to the server to try to retrieve the key. We'll come back to this, but the more important aspect is how the file is getting encrypted.

Following the "do_encrypt" function, we can see that the seed is being generated based on the time function, which is going to grab an epoch time value based on our system time.

Following this further into super_secure_random shows us exactly how the key value is generated. It takes the state, which starts as our seed (the epoch time value), multiplies it by 214013, adds 2531011, performs a bitwise shift, and then performs a bitwise AND. Using this, we can generate our own key if we know the seed.

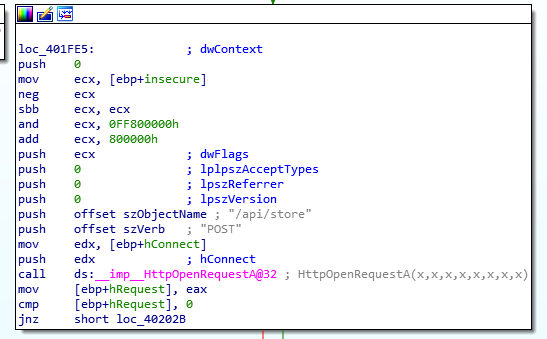
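
A minimal Python sketch of that generator follows. The multiplier and increment come from the disassembly described above, and they are the same constants used by the `rand()` in Microsoft's C runtime. The rest is assumption borrowed from that `rand()`: a 32-bit state, a right shift of 16, and a mask of 0x7FFF. The 8-byte key length and the use of the low byte of each output are also assumptions.

```python
MULTIPLIER = 214013   # from the disassembly
INCREMENT = 2531011   # from the disassembly
SHIFT = 16            # assumption: same shift as Microsoft's rand()
MASK = 0x7FFF         # assumption: same mask as Microsoft's rand()


class SuperSecureRandom:
    """Linear congruential generator seeded with an epoch timestamp."""

    def __init__(self, seed: int) -> None:
        self.state = seed & 0xFFFFFFFF  # assumption: 32-bit state

    def next(self) -> int:
        # Advance the state, then derive the output with shift and AND.
        self.state = (self.state * MULTIPLIER + INCREMENT) & 0xFFFFFFFF
        return (self.state >> SHIFT) & MASK


def generate_key(seed: int, length: int = 8) -> bytes:
    """Derive key bytes from a seed (key length and low-byte use are assumptions)."""
    rng = SuperSecureRandom(seed)
    return bytes(rng.next() & 0xFF for _ in range(length))


print(generate_key(1).hex())
```

With these assumptions the generator reproduces the well-known first outputs of Microsoft's `rand()` for seed 1 (41, then 18467), which is a quick sanity check that the arithmetic is right.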

Looking at other functions present, we can also see two different API calls being made. When encrypting, a call to "/api/store" is made, which will store the encryption key on the remote server.

Additionally, when decrypting, a call to "/api/retrieve" will be made, which uses the ID value in order to retrieve a stored encryption key from the server.

Capturing the traffic with Wireshark shows that the API only stores and retrieves the key; the ID is not actually needed to perform the decryption.

As mentioned previously, the seed is generated from an epoch time stamp. Since the objective text provides a clue that the encryption took place within a certain time window, it is possible to brute force the decryption by generating keys for every second in that range.
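
Enumerating the candidate seeds is just a matter of walking the window one second at a time. The sketch below shows the idea; the one-hour window used in the example is a placeholder, not the window given in the objective text.

```python
from datetime import datetime, timezone
from typing import Iterator


def candidate_seeds(start: datetime, end: datetime) -> Iterator[int]:
    """Yield every whole epoch second from start to end, inclusive."""
    first = int(start.timestamp())
    last = int(end.timestamp())
    yield from range(first, last + 1)


# Placeholder window for illustration only.
window_start = datetime(2019, 12, 1, 12, 0, 0, tzinfo=timezone.utc)
window_end = datetime(2019, 12, 1, 13, 0, 0, tzinfo=timezone.utc)

seeds = list(candidate_seeds(window_start, window_end))
print(len(seeds), "candidate seeds")
```

Even a window of several hours only yields a few tens of thousands of seeds, which is why a time-based seed makes the key trivially brute-forceable.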

I decided to put together a script that would loop through the time range generating keys, and then use the binary to do the heavy lifting for the decryption; however, as the program's output showed, it requires an ID, not a key. My initial thought was to take each generated key, make an API call to store it, and use the ID it returned as the argument for decryption; however, this produced a lot of false positives as well as other issues.

Crypto-wise, I knew the ID wasn't really needed to decrypt, but there was still that internet check: if the connection was down, the binary would terminate. To overcome this and speed up the decryption process, I spun up a local Python server listening on localhost and modified my hosts file so any requests to the actual API would hit my server instead. The Python server simply reflected whatever was sent to it, so the binary passed the internet check and proceeded to decrypt with the key that was reflected back.

The following is the script used for the decryption:

Example Python server to respond to the API requests:
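
A reflecting server along these lines can be written with nothing but the standard library. The following is a minimal sketch of the idea rather than the exact script I ran; the plain-text content type and the empty 200 response to GET requests are assumptions about what the binary will accept.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


class ReflectHandler(BaseHTTPRequestHandler):
    """Echo the request body back so the client receives what it sent."""

    def _reflect(self, body: bytes) -> None:
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")  # assumption
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        self._reflect(self.rfile.read(length))

    def do_GET(self) -> None:
        # Assumption: an empty 200 response is enough for a connectivity check.
        self._reflect(b"")

    def log_message(self, fmt: str, *args) -> None:
        pass  # keep the console quiet during a long brute-force run


def make_server(host: str = "127.0.0.1", port: int = 80) -> HTTPServer:
    """Create the server; binding to port 80 usually needs elevated privileges."""
    return HTTPServer((host, port), ReflectHandler)


# To run it: make_server().serve_forever()
```

Because the body is echoed verbatim, passing a generated key where the ID would normally go means the binary gets that key back as the "retrieved" key.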

Running this takes a little while, and there were a lot of false-positive decryptions. However, with a monitoring script or just the "file" command to keep an eye on things, we eventually find that a valid decryption took place. Corrupted files are identified as just "data", while our valid decryption shows up as an actual PDF file.
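
The same check can be done in Python by looking at the magic bytes, since a PDF file begins with `%PDF-`. This is a small sketch of such a monitoring helper; the function names are my own.

```python
def looks_like_pdf(data: bytes) -> bool:
    """Return True if the data starts with the PDF magic bytes."""
    return data.startswith(b"%PDF-")


def is_pdf_file(path: str) -> bool:
    """Read only the first five bytes of a file and test them."""
    with open(path, "rb") as handle:
        return looks_like_pdf(handle.read(5))


print(looks_like_pdf(b"%PDF-1.5\n"))         # True
print(looks_like_pdf(b"\x8f\x02\xa1\x00!"))  # False
```

Checking each output as it is written lets the brute-force loop stop at the first real hit instead of sifting through the false positives afterwards.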

Now we know that the file was encrypted on Friday, December 6, 2019 at 8:20:49 PM GMT.
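
Converting that timestamp back to epoch seconds gives the seed that produced the working key:

```python
from datetime import datetime, timezone

# Friday, December 6, 2019 at 8:20:49 PM GMT
encrypted_at = datetime(2019, 12, 6, 20, 20, 49, tzinfo=timezone.utc)
seed = int(encrypted_at.timestamp())
print(seed)  # 1575663649
```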

Opening up the decrypted PDF, we find the answer to the objective:
